🤖 Byeongjin Kang

Ph.D. Student @ Yonsei University | 3D Vision & Embodied AI

I am a Ph.D. student in Artificial Intelligence at Yonsei University, in the V-Lab advised by Prof. EunByung Park.

My research focuses on 3D vision and robot learning, developing AI systems that perceive and understand the real world through 3-dimensional spatial reasoning from multimodal sensor data. I aim to create frameworks where 3D perception enables intelligent robotic behavior to solve complex real-world problems.

Previously, I was a research intern at RLLAB (Prof. Youngwoon Lee) and CSI Lab (Prof. Yusung Kim).

[ Publications ]

Coming soon...

[ Projects & Activities ]

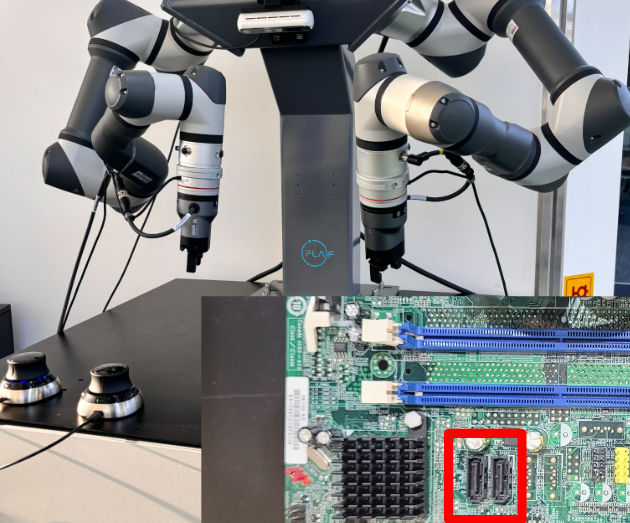

This project explored online reinforcement learning for a complex horizontal task using a main board and cables. While full success wasn't achieved, it provided valuable insights into real-time adaptation challenges and established groundwork for future online RL advancements.

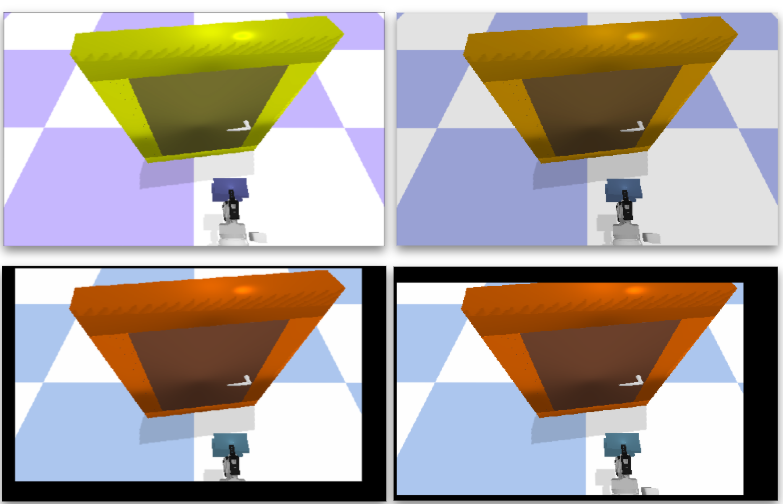

This project enhances robotic manipulation through imitation learning with visual robustness. Using multiple cameras and vision techniques, it handles lighting and color variations effectively. Our model achieved an 80% success rate in noisy environments, while the baseline model (ACT) achieved only 10%.

This project develops a novel segmentation pipeline using cosine similarity and clustering for video lecture summarization. With only 500M parameters, we were able to handle 50k-character inputs without any collapse.